A New Website for the Cincinnati Public Library

Oct 22 – Dec 9, 2018

DEMONSTRATED SKILLS FOR THIS PROJECT

- Write a Project Proposal that describes the website’s current state and includes a project plan

- Provide timely peer feedback and iterate based on feedback received

- Interview stakeholders

- Conduct field research and a literature review

- Define key personas based on key user goals and tasks

- Create a matrix of prioritized tasks for each persona

- Perform content analysis to decide what content to keep, delete, revise, or add

- Choose a primary classification scheme

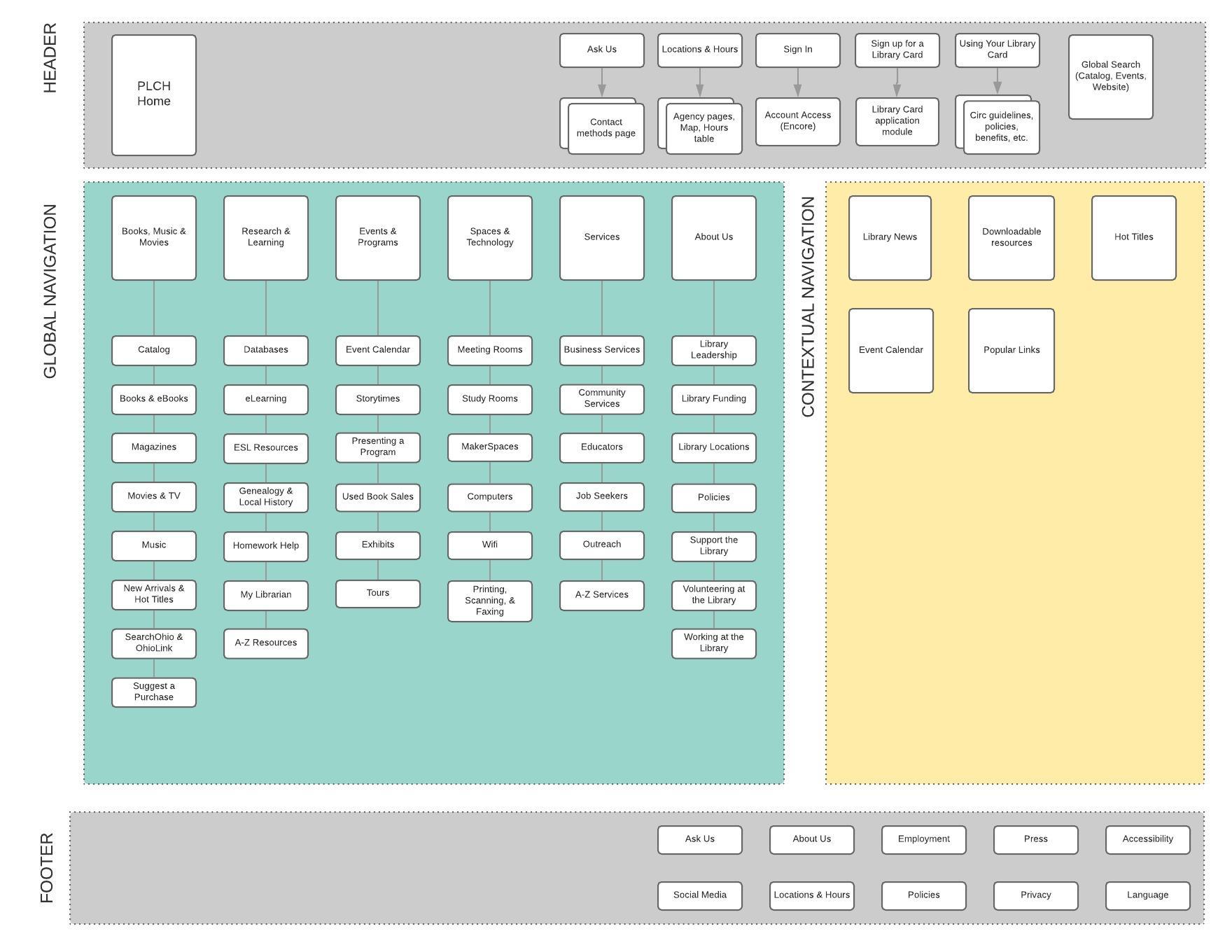

- Create a sitemap that includes labeling, taxonomy, and access points

- Assess labeling and taxonomy with remote user testing (Treejack), and make changes based on results

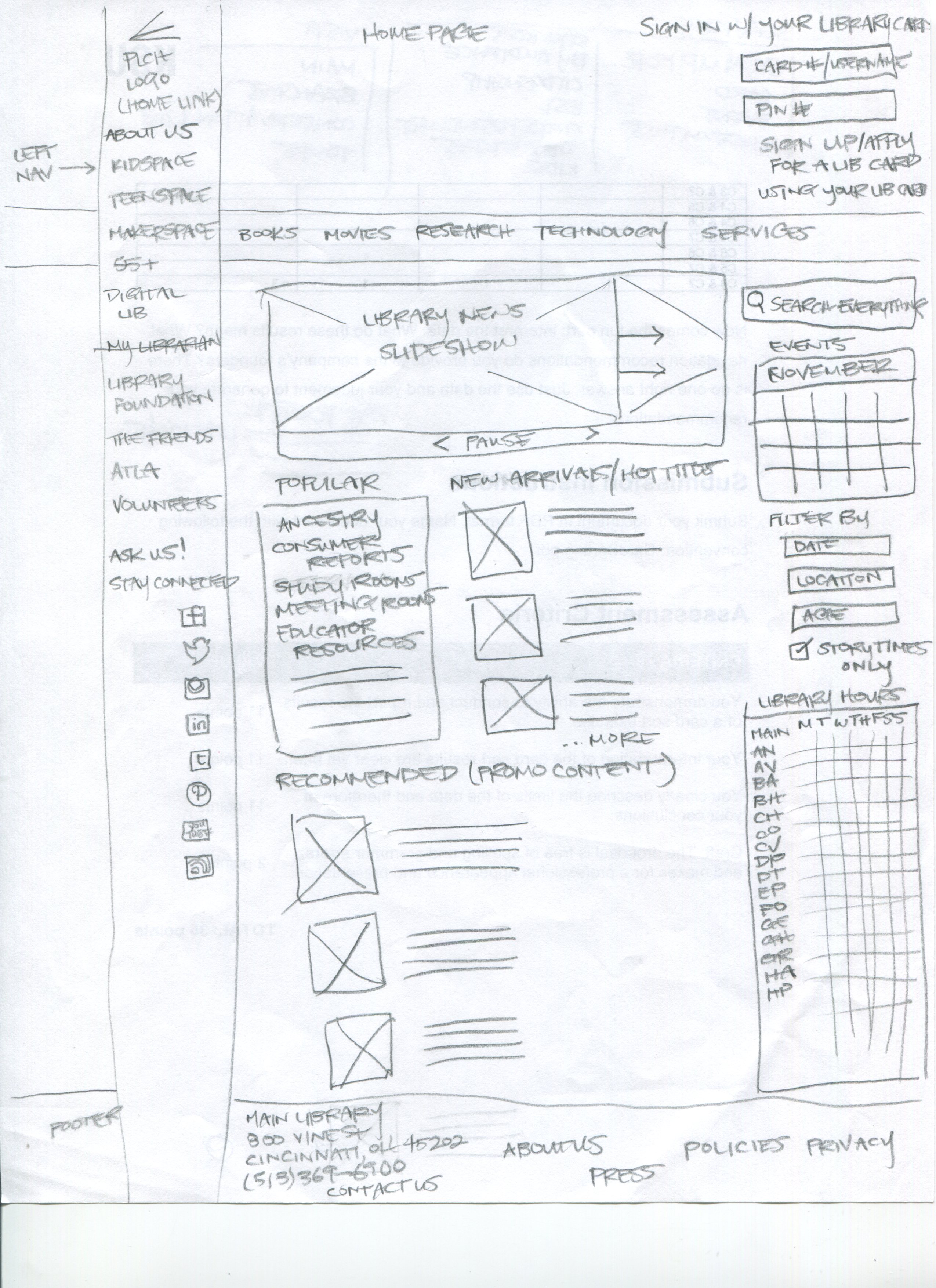

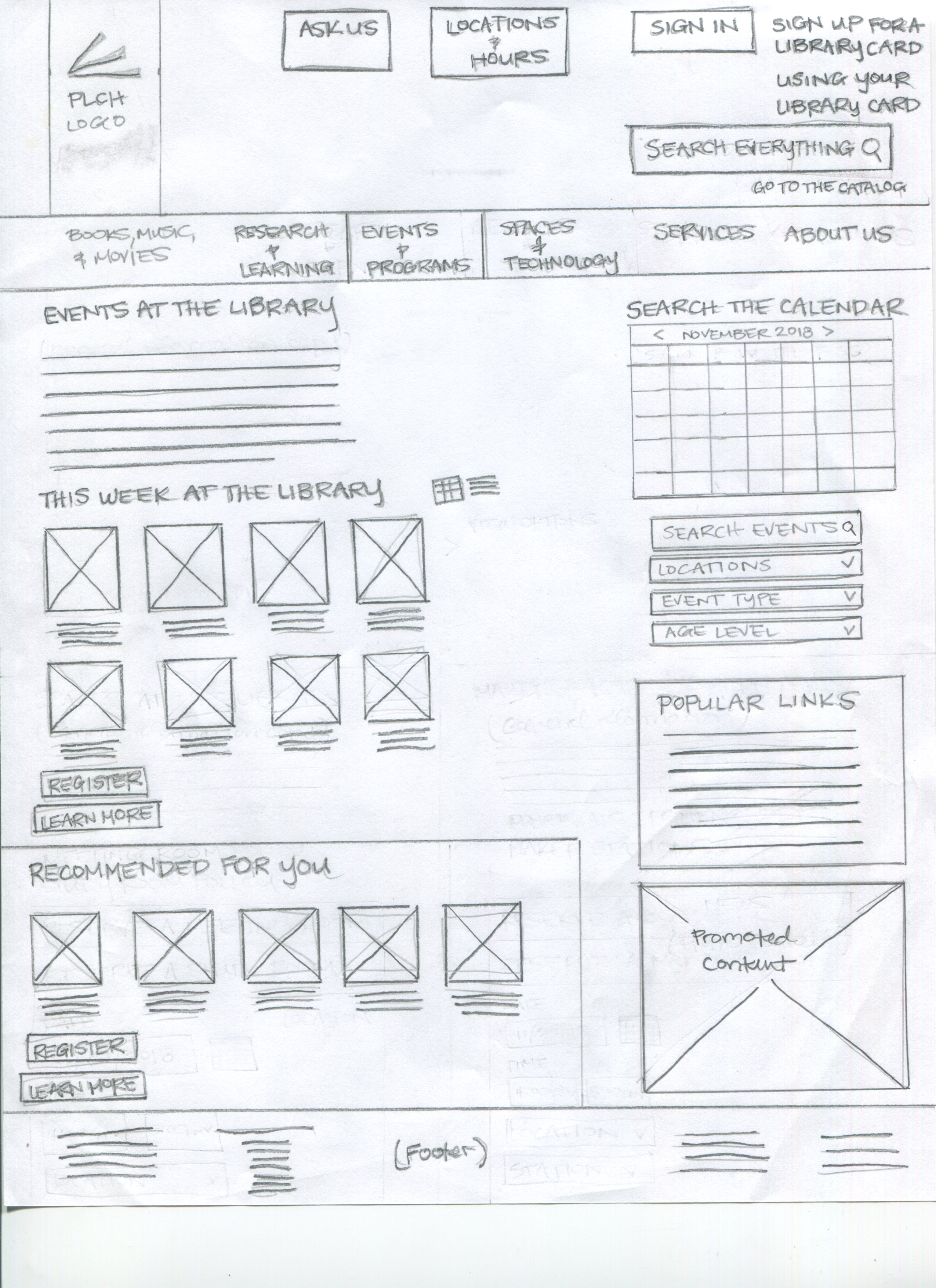

- Sketch paper wireframes of homepage and key workflows

- Revise wireframes based on team feedback

- Test navigation based on key user tasks (Chalkmark)

- Evaluate results and report findings in a final deliverable

Scenario

When my Information Architecture class offered an opportunity to reimagine a public-facing library website from the ground up, I was thrilled to take on the challenge. Having worked at the Public Library of Cincinnati and Hamilton County (PLCH) for many years, I was able to draw on my domain knowledge and my connections with current stakeholders to enrich my research and findings.

My Process

Project Proposal

As part of its 5-year strategic plan, the Cincinnati Public Library needed a more dynamic, mobile-friendly web presence that would better meet the rapidly evolving needs of its customers.

Acting as an independent UX consultant, I first wrote a Project Proposal for the client that covered:

- my interpretation of the client’s stated needs, particularly more findable and understandable content and a more up-to-date look and feel.

- an initial assessment of the site’s current state.

- a project plan that detailed the scope, activities, and timeline for the redesign.

Understanding Library Users and Context

Next, I conducted field research and a literature review. The artifacts I reviewed included:

- the Library’s Strategic Plan

- the Annual Cardholder Survey

- Customer Clusters

- recent customer feedback

I then interviewed 3 Library stakeholders to better understand their business objectives and their vision for the Library’s digital information space. In particular, I wanted to learn:

- Their role with respect to the Library’s web presence.

- What qualities they want people to attribute to the Library and its public website.

- How the Library’s website fits into its overall strategy.

- Who their users are now and who they want their users to be in 5 years.

I also spoke informally with current Library users and staff about their perceptions of the Library’s website and observed their information-seeking behaviors. Finally, I used my observations, interviews, and domain knowledge to prioritize user tasks and construct personas.

Supporting User Tasks

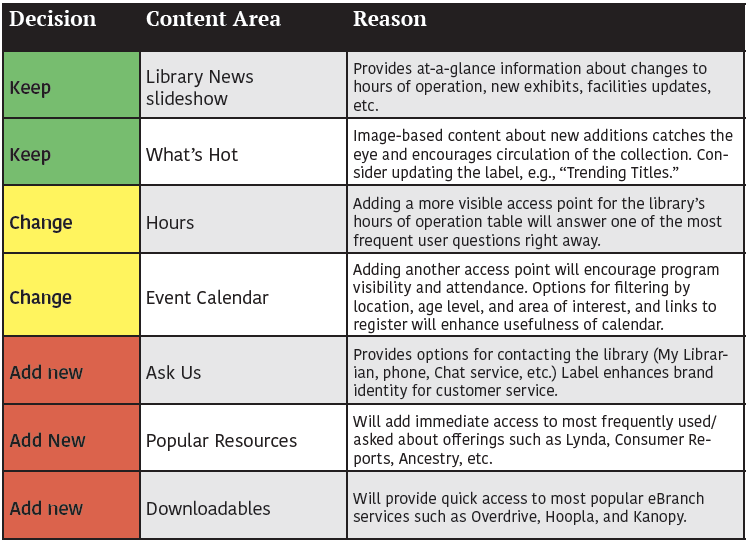

I created several artifacts, including personas and a matrix of prioritized tasks for each persona, that formed the building blocks of the Library’s new information architecture.

User Testing with Treejack and Chalkmark

I recruited 4 Library users for remote, unmoderated testing in Treejack to evaluate the site’s labeling and information organization. Based on my own analysis of the results and feedback from classmates, I revised my sitemap to better reflect users’ mental models.

Next, I represented my labeling and taxonomy in several wireframes, which I loaded into Chalkmark for testing with 4 additional users. After analyzing the results, I iterated on my designs one last time before presenting them to the client.

Project Results

The Library’s new site design, validated by user testing data, addressed the client’s stated problems in several ways:

- A true global Search that integrates site and catalog content for a more seamless experience.

- More usable Event, Space, and Technology reservation processes that match user expectations and workflow behaviors.

- More extensible taxonomy and label structures that better support information finding.

- Structured content that is more relevant to users and supports a responsive experience across devices.

Lessons Learned

- 3 out of 4 users vs. 75% of users. Results from tests with a small number of participants should be reported as counts rather than percentages. Counts show the true sample size and avoid overstating how reliable a success rate is.

- “Ain’t nobody got time for all that.” Including just enough detail to understand the message of written artifacts reflects clarity of thought and demonstrates to clients that you respect their time.

- Labels should reflect the language of your users. This is critical to supporting user tasks and making content discoverable. No one but librarians will ever refer to the Catalog as an OPAC (Online Public Access Catalog).