If there is one overriding lesson I’ve learned from this week’s design assessment, it is to know the capabilities and limitations of your chosen recording method and prepare accordingly. I used my iPhone 5 to record my four test sessions, which worked just fine for two users. However, my phone’s limited storage and my own ignorance about video formats led me to break each of the other two sessions into three videos, leaving me with an uneven set of eight videos. Because my phone’s camera records 1080p HD video at 30 fps by default, which takes up roughly 130 MB per minute of footage, my sessions ranged from about 1 GB to approximately 4.5 GB in size. This became especially problematic when I tried to import the videos to my iMac, and it slowed my progress to a teeth-grinding crawl when I attempted to upload them to Dropbox. I didn’t even try to upload them to Kent’s website. Doing anything with a 4 GB video on an early 2008 iMac (even one with added RAM) is like watching hair grow.

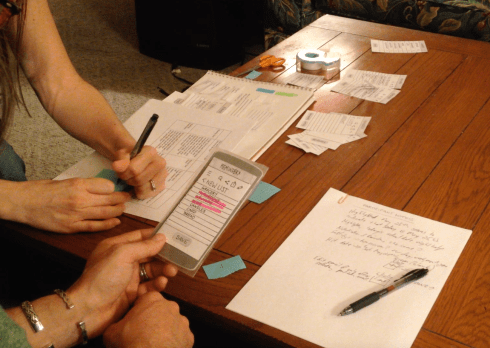

When it comes to running recorded design assessments, I have learned that it is best to arrange for someone else to operate the recording equipment so that you can focus on moderating the test. Luckily, I was working with some cool cats who were very understanding about my lack of expertise. Next time, I would also be sure to angle the camera so that viewers can see users’ facial expressions and body language as well as the test materials they are working with. Despite my painstaking preparation of test materials and scenarios, I frequently encountered unexpected user behavior during testing. When a participant tapped on something I hadn’t designed a screen for, I had to pause and decide whether I could move on to the next screen or should stop and ask what else they might tap to get the same result. I tried not to lead my test subjects, but this became very difficult at times when they seemed to miss obvious buttons or tapped something that seemed illogical to me. When this happened, I often asked users to describe what the different options and icons on the screen meant to them, which helped tease out their thought process for using the app. This not only gave me insight into improving the app’s usability but also helped users navigate through the screens more fluidly.

Human behavior is unpredictable. I know this intellectually, but encountering it in a test session over which I expect to have a modicum of control can throw me completely off-kilter. When an interview is interrupted by dogs, cats, and ringing phones, I struggle to maintain my train of thought. When my test subject suggests that my stakeholder might have an ulterior motive to steal my design ideas, or proposes changing list items from mushrooms and Gruyère to Funyuns and Velveeta, I have to laugh, even though I feel like I should probably hit the reset button on the interview. As yet, I lack the wisdom to know when surrendering the interview to a participant’s stream of consciousness will help guide the design and when I should interrupt the conversation to maintain focus and manage time. Despite my many errors, I guess the silver lining is that the users at least felt comfortable enough with the test and its moderator to reveal their unfiltered thoughts.